A promise is a promise! Three weeks ago, we published a brief here for a Badsender campaign about our emailing training courses. It was an opportunity to show you how to work on an email marketing brief using something we did for ourselves. Two weeks ago, we launched the campaign. Now it's time to analyze the results and draw some conclusions!

The campaign!

Your devoted Thomas (who is no saint, and could hardly be called a doubting Thomas either... we make our references where we can) put together 3 beautiful emails, using our usual newsletter template as a basis.

Here is what it looks like for the "Deliverability training":

We're not going to spend too much time on the email design, so if you want to see how it looks, you can use the links to the Email On Acid tests of the campaign:

Email HTML training version | Emailing strategy training version | Deliverability training version

"Basic" performance indicators

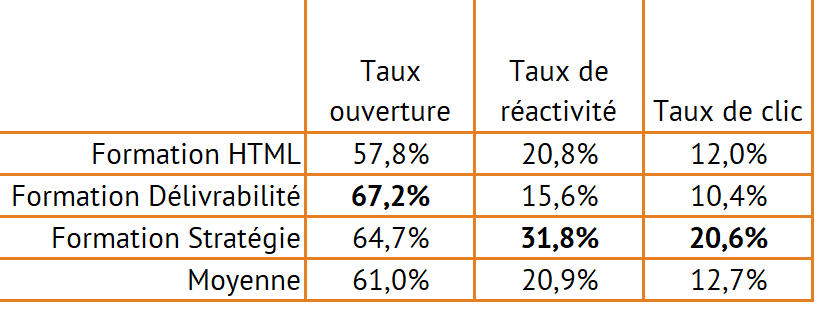

These are the classics! Open rate (unique opens / delivered emails), responsiveness rate (unique clicks / unique opens) and click rate (unique clicks / delivered emails). I spell out the calculation method... because it isn't always clear, and your various campaign management platforms may use slightly different formulas.
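To make the arithmetic explicit, here is a minimal sketch of the three rates. It assumes that "receipts" means delivered emails and that the responsiveness rate is unique clicks divided by unique opens, which is the usual definition. The `CampaignStats` fields and the counts in the example are made up for illustration and are not the campaign's real figures or any platform's API.

```python
from dataclasses import dataclass


@dataclass
class CampaignStats:
    """Raw counts for one campaign (field names are illustrative)."""
    delivered: int      # emails that reached the recipients
    unique_opens: int   # distinct recipients who opened
    unique_clicks: int  # distinct recipients who clicked


def safe_ratio(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def basic_rates(stats: CampaignStats) -> dict[str, float]:
    """Compute the three classic rates as fractions (multiply by 100 for %)."""
    return {
        "open_rate": safe_ratio(stats.unique_opens, stats.delivered),
        "responsiveness_rate": safe_ratio(stats.unique_clicks, stats.unique_opens),
        "click_rate": safe_ratio(stats.unique_clicks, stats.delivered),
    }


# Made-up counts, only to show the arithmetic:
rates = basic_rates(CampaignStats(delivered=200, unique_opens=120, unique_clicks=30))
print(rates)
```

With these definitions, the click rate is simply the open rate multiplied by the responsiveness rate (0.6 × 0.25 = 0.15 here). Comparing platforms is therefore mostly a matter of checking which denominator each one uses.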

Before commenting, a quick reminder about targeting: the email about the HTML training had priority, followed by the deliverability training, and lastly the emailing strategy training. Because of these priorities and exclusions, the "HTML" campaign had 5 times the audience of the "Strategy" campaign. Don't forget that we work in hyper-targeted B2B, so we don't have huge volumes (which shows in some of the percentages).

As for the numbers themselves, the "Deliverability" course seems to have got the most attention in the inbox... but the fewest clicks (it's always hard to tell whether the target or the message is responsible). The "Strategy" training (smallest volume) seems to be the most successful overall.

On their own, these numbers don't mean much. An average open rate of 61% is obviously well above most emailing market benchmarks, but the only benchmark that should count is the one you build internally to compare your own campaigns (if you want a benchmark of your emailing campaigns, we know how to do it 😉).

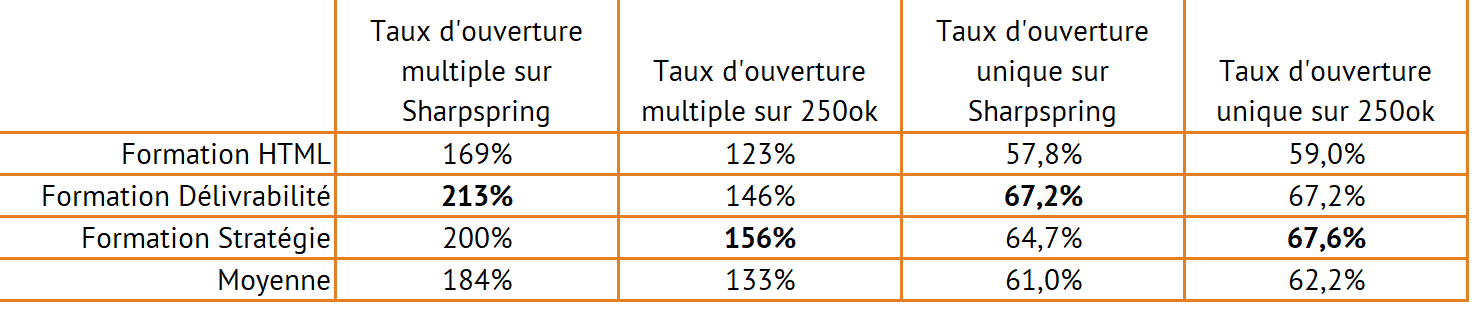

Let's compare our tracking sources, just for fun!

For its own emailing needs, Badsender (currently) uses a little-known marketing automation solution called Sharpspring, from which we retrieved most of the statistics. We also tag our emails with 250ok's Analytics module (if you want to know more about 250ok, we recently published an article on the subject), which gives us behavioral information.

I chose to present this table mainly to show you how much the figures can differ depending on your tracking source. We can clearly see that, for multiple opens, 250ok records far fewer events than Sharpspring. Who is telling the truth? It's hard to know, but since 250ok uses a more sophisticated tracking method (we'll have to write an article on the subject), I would tend to say that its figures are closer to the recipient's real behavior.

Otherwise, how do you read a multiple open rate? Take the 200% rate, which is very easy to read (Sharpspring, "Strategy" training): it means that, on average, the openers of this email opened it twice. That is a strong sign of interest in a message.
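The reading above corresponds to dividing total opens by unique opens. Here is a minimal sketch, assuming that definition (individual platforms may compute it differently). The counts are illustrative only.

```python
def multiple_open_rate(total_opens: int, unique_opens: int) -> float:
    """Total opens divided by unique opens, expressed as a percentage.

    200.0 means each opener opened the email twice on average.
    Returns 0.0 when nobody opened the email.
    """
    if unique_opens == 0:
        return 0.0
    return 100 * total_opens / unique_opens


# Illustrative counts: 50 openers generating 100 opens in total.
print(multiple_open_rate(total_opens=100, unique_opens=50))  # 200.0
```

The formula also shows why two tracking tools can disagree so much. If one tool counts fewer repeat open events for the same openers, its numerator shrinks while the denominator stays roughly the same.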

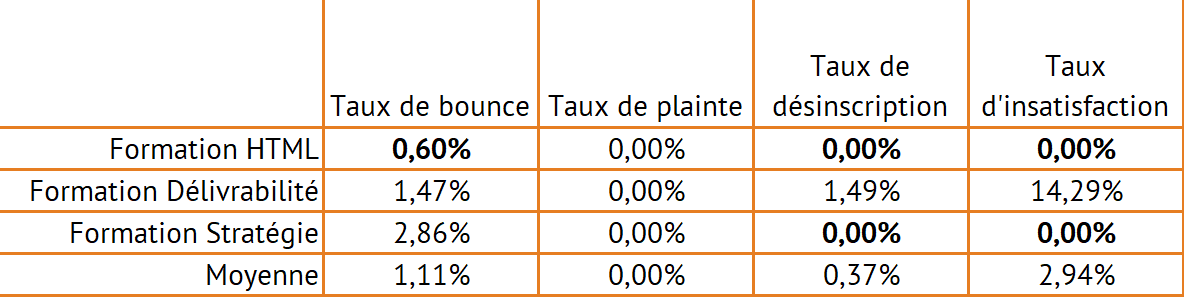

"Technical" indicators

Bounce rate => OK! Complaint rate => Clear! Unsubscribe rate => Variable!

As for the email dissatisfaction rate... you'll have to read our article on the subject, but in short, we could do better on email deliverability.

These technical rates need close monitoring in case one of them goes awry... but if you do the job right and none does, there is rarely much to learn from them.

Finally, some really interesting numbers in this campaign!

The most interesting figures to follow for an email campaign are always tied to its context and to the experiments you run. The challenge of these 3 emails was to deliver personalized content to each recipient according to their profile and browsing history.

So each email highlighted one of the 3 courses, with the other two present in a secondary position. The big question is: "Was it relevant?"

In an ideal world, to verify the relevance of the method, we would have used test groups randomly receiving each of the 3 creatives. Unfortunately, the volumes available were too low for this technique.

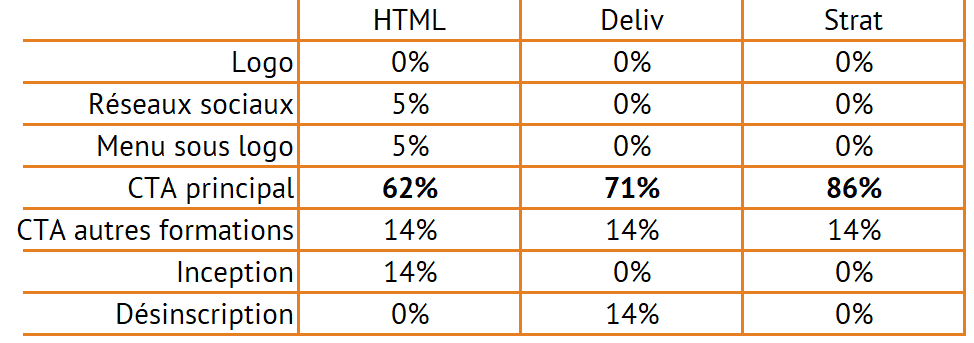

So we chose to check whether the main call to action (CTA) was indeed receiving the majority of clicks. If it wasn't, we could have concluded that our targeting/personalization was completely off. As it turns out, we didn't do badly at all! The main CTA is always (by far) the main click area... and the CTAs dedicated to the other courses come second.
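This check can be written down as a small rule: compute each click zone's share of clicks and verify that the highlighted CTA beats every other zone. Here is a minimal sketch under that assumption. The zone names and click counts are hypothetical and are not the campaign's data.

```python
def cta_click_shares(clicks_by_zone: dict[str, int]) -> dict[str, float]:
    """Share of total clicks received by each click zone (fractions)."""
    total = sum(clicks_by_zone.values())
    if total == 0:
        return {}
    return {zone: n / total for zone, n in clicks_by_zone.items()}


def personalization_holds(clicks_by_zone: dict[str, int], main_zone: str) -> bool:
    """True if the highlighted CTA got strictly more clicks than every other zone."""
    main_clicks = clicks_by_zone.get(main_zone, 0)
    return main_clicks > 0 and all(
        main_clicks > n for zone, n in clicks_by_zone.items() if zone != main_zone
    )


# Hypothetical click counts per zone, for illustration only:
example_clicks = {"main_cta": 40, "other_course_1": 8, "other_course_2": 6, "footer": 2}
print(cta_click_shares(example_clicks)["main_cta"])
print(personalization_holds(example_clicks, "main_cta"))
```

This version checks for the top click zone. To require an absolute majority instead, you would test whether the main zone's share exceeds 0.5.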

Checking the engagement of each version

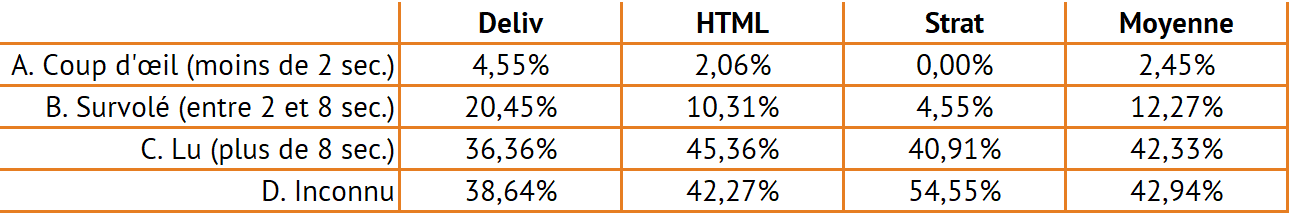

250ok's behavioral tracking provides various data on how recipients behave with their emails. One technique is to measure how long the email is read, with reading time split into 3 categories: under 2 seconds is a glance, between 2 and 8 seconds is a skim of the email, and over 8 seconds is a real read.
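The bucketing described above can be sketched as follows. Two assumptions are ours and not 250ok's documented behavior: opens lasting exactly 2 s or exactly 8 s are counted as skims, and an open with no measurable duration is labeled "unknown". The reading times in the example are made up.

```python
from collections import Counter
from typing import Iterable, Optional

CATEGORIES = ("glance", "skim", "read", "unknown")


def reading_category(seconds: Optional[float]) -> str:
    """Bucket one reading time using the 2 s / 8 s thresholds.

    Assumption: exactly 2 s and exactly 8 s both count as a skim;
    None stands for an open whose duration could not be measured.
    """
    if seconds is None:
        return "unknown"
    if seconds < 2:
        return "glance"
    if seconds <= 8:
        return "skim"
    return "read"


def engagement_breakdown(durations: Iterable[Optional[float]]) -> dict[str, float]:
    """Share of opens falling into each category (fractions summing to 1)."""
    counts = Counter(reading_category(d) for d in durations)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {cat: counts[cat] / total for cat in CATEGORIES}


# Made-up reading times in seconds, for illustration only:
print(engagement_breakdown([1.2, 5.0, 12.3, 30.0, None]))
```

Keeping "unknown" as its own category, rather than dropping those opens, makes it clear how large that portion is before you draw conclusions about the "read" share.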

The good news is that, apart from the "unknown" portion, the majority of openers stayed in front of the emails for more than 8 seconds. It is the "HTML training" campaign that seems to have interested the recipients the most.

What email reading environments?

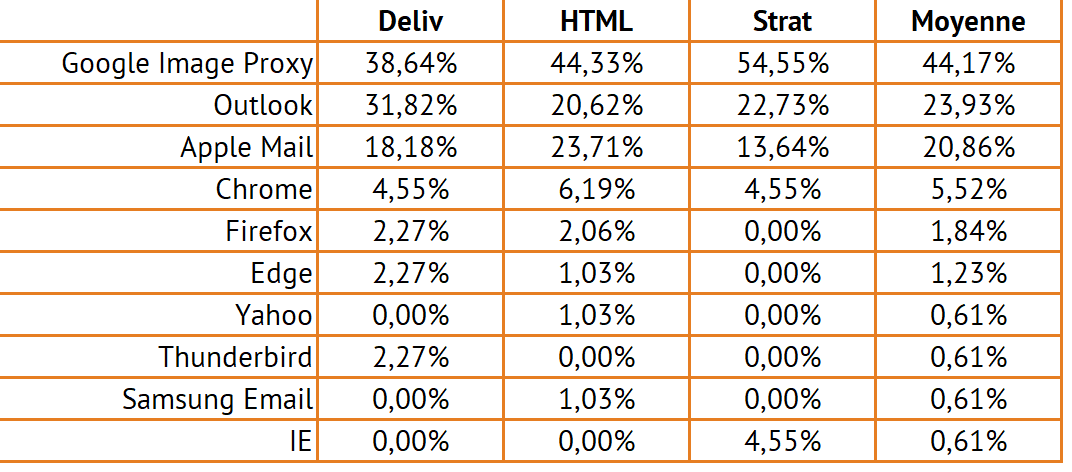

Here we are, in full mise en abyme! Do the different email professions open their emails in different email clients? It was a fun question to ask (you could ask the same thing about age groups, for example). The answer is clearly yes!

While everyone opens mostly with Gmail (yes, that's what "Google Image Proxy" means: Gmail caches all the images of your emails before displaying them to recipients), integrators seem to prefer Apple Mail in second place, and Outlook is popular among people interested in deliverability (^^)... it was just for fun... but cross-referencing all this data can be genuinely useful.

What should we optimize for next time? Turn this ad-hoc campaign into an automatic campaign!

We're not going to give you an ROI calculation, but this campaign was a success: we sold training sessions after sending it! And if you think about it... with behavioral targeting, we could easily turn this campaign into automated emails! x days after a visit to a training page, if there has been no sales inquiry, send an email automatically. But that's another story!
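The trigger described above could look something like the following sketch. It is purely hypothetical: the `Prospect` fields, `delay_days` (the "x" above) and the rule that a request made after the visit cancels the email are our assumptions. This is not Sharpspring's API or an existing Badsender workflow.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Prospect:
    """Hypothetical contact record built from behavioral tracking."""
    email: str
    last_training_page_visit: Optional[datetime]  # most recent training page visit
    last_commercial_request: Optional[datetime]   # quote/contact request, if any
    already_emailed: bool = False                 # avoid sending the follow-up twice


def should_send_followup(p: Prospect, now: datetime, delay_days: int) -> bool:
    """True once delay_days have passed since the visit with no request since then."""
    if p.already_emailed or p.last_training_page_visit is None:
        return False
    if now - p.last_training_page_visit < timedelta(days=delay_days):
        return False  # too early: wait the full delay first
    # A commercial request made after the visit means sales already has the lead.
    if (p.last_commercial_request is not None
            and p.last_commercial_request >= p.last_training_page_visit):
        return False
    return True


now = datetime(2024, 1, 10)
visitor = Prospect("a@example.com", datetime(2024, 1, 5), None)
requester = Prospect("b@example.com", datetime(2024, 1, 5), datetime(2024, 1, 6))
print(should_send_followup(visitor, now, delay_days=3))    # True
print(should_send_followup(requester, now, delay_days=3))  # False
```

In a real marketing automation tool, this rule would usually be set up as a workflow with a wait step and an exit condition rather than written as code. The sketch only makes the decision logic explicit.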

Feel free to use the comments below. We'd love to hear what you think about our approach!